1 kubeadm创建集群

1.1 安装kubeadm

-

一台兼容的 Linux 主机。Kubernetes 项目为基于 Debian 和 Red Hat 的 Linux 发行版以及一些不提供包管理器的发行版提供通用的指令

-

每台机器 2 GB 或更多的 RAM (如果少于这个数字将会影响你应用的运行内存)

-

2 CPU 核或更多

-

集群中的所有机器的网络彼此均能相互连接(公网和内网都可以)

-

- 设置防火墙放行规则

-

节点之中不可以有重复的主机名、MAC 地址或 product_uuid。请参见这里了解更多详细信息。

-

- 设置不同hostname

-

开启机器上的某些端口。请参见这里 了解更多详细信息。

-

- 内网互信

-

禁用交换分区。为了保证 kubelet 正常工作,你 必须 禁用交换分区。

-

- 永久关闭

1.2 为所有机器执行下面操作

#各个机器设置自己的域名

hostnamectl set-hostname xxxx

# 将 SELinux 设置为 permissive 模式(相当于将其禁用)

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

#关闭swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

#允许 iptables 检查桥接流量

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

br_netfilter

EOF

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sudo sysctl --system

1.3 安装kubelet、kubeadm、kubectl

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

exclude=kubelet kubeadm kubectl

EOF

sudo yum install -y kubelet-1.20.9 kubeadm-1.20.9 kubectl-1.20.9 --disableexcludes=kubernetes

sudo systemctl enable --now kubelet

1.4 下载各个机器需要的镜像

sudo tee ./images.sh <<-'EOF'

#!/bin/bash

images=(

kube-apiserver:v1.20.9

kube-proxy:v1.20.9

kube-controller-manager:v1.20.9

kube-scheduler:v1.20.9

coredns:1.7.0

etcd:3.4.13-0

pause:3.2

)

for imageName in ${images[@]} ; do

docker pull registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/$imageName

done

EOF

chmod +x ./images.sh && ./images.sh

1.5 初始化主节点

#所有机器添加master域名映射,以下需要修改为自己的

echo "192.168.59.168 cluster-endpoint" >> /etc/hosts

#主节点初始化

kubeadm init \

--apiserver-advertise-address=192.168.59.168 \

--control-plane-endpoint=cluster-endpoint \

--image-repository registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images \

--kubernetes-version v1.20.9 \

--service-cidr=10.96.0.0/16 \

--pod-network-cidr=172.168.0.0/16

#所有网络范围不重叠

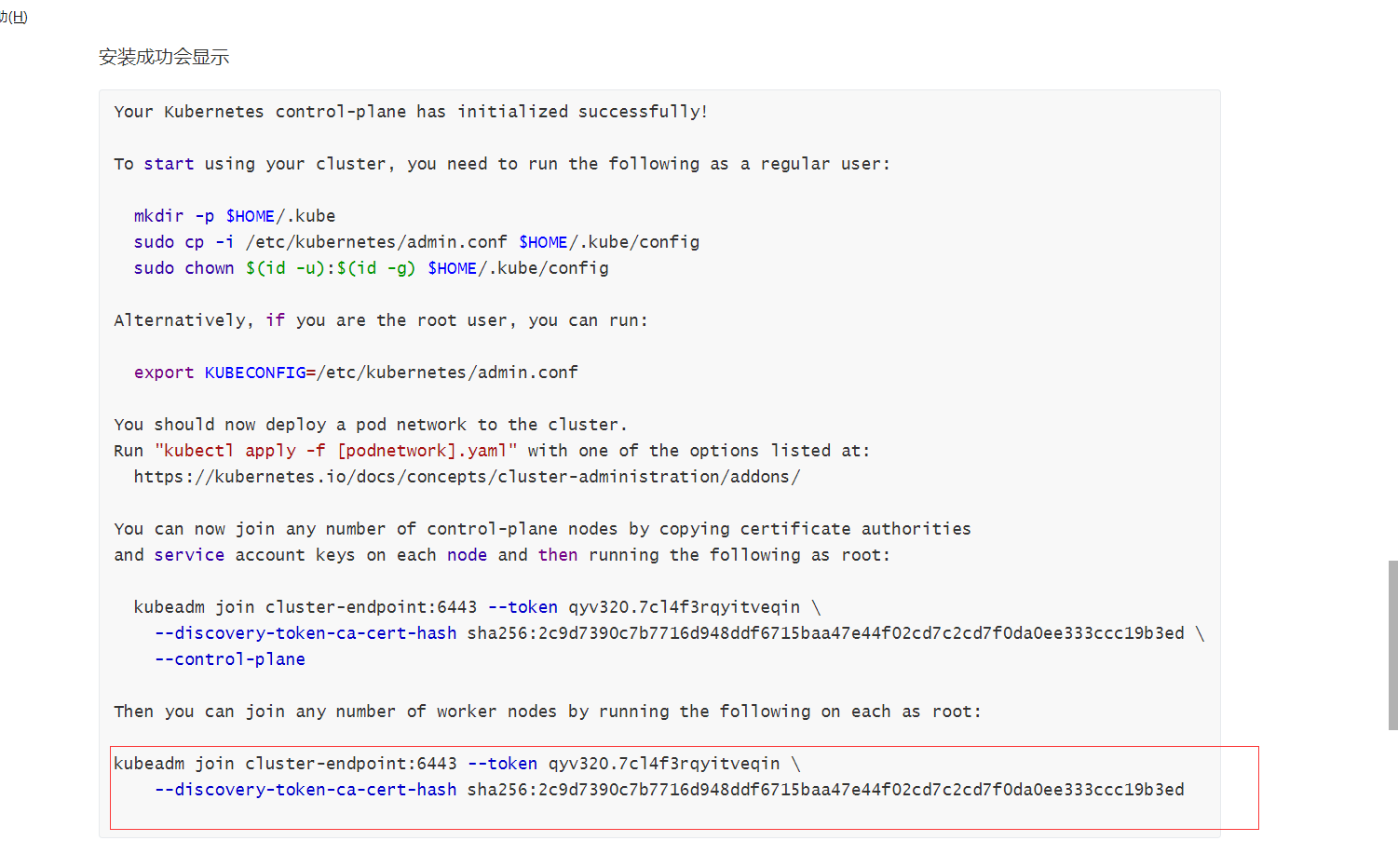

安装成功会显示

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join cluster-endpoint:6443 --token qyv320.7cl4f3rqyitveqin \

--discovery-token-ca-cert-hash sha256:2c9d7390c7b7716d948ddf6715baa47e44f02cd7c2cd7f0da0ee333ccc19b3ed \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join cluster-endpoint:6443 --token qyv320.7cl4f3rqyitveqin \

--discovery-token-ca-cert-hash sha256:2c9d7390c7b7716d948ddf6715baa47e44f02cd7c2cd7f0da0ee333ccc19b3ed

1.6 主节点执行上面展示的命令

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

1.7 部署网络插件

curl https://docs.projectcalico.org/manifests/calico.yaml -O

kubectl apply -f calico.yaml

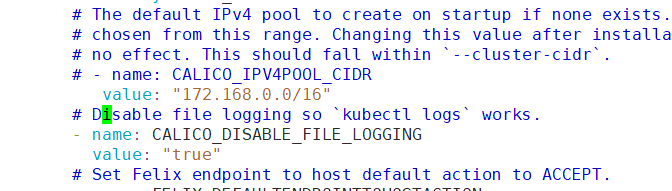

1.8 修改配置

如果前面修改了地址,那么这个地址也需要进行修改

把原来#192.168.0.0/16修改成下面这个

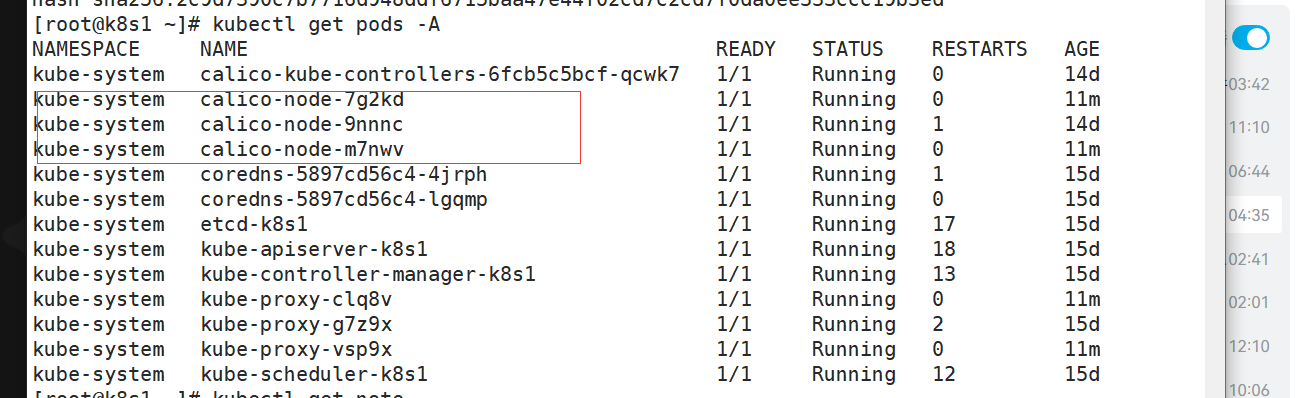

1.9 查看部署k8s所有集群

kubectl get pods -A

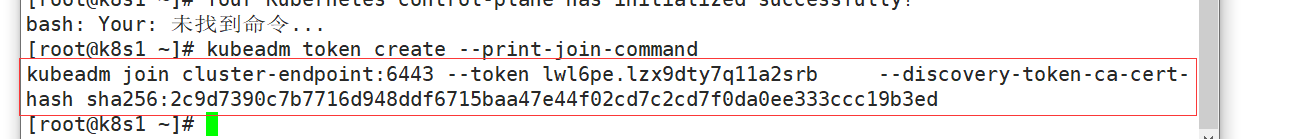

1.10 worker节点加入集群

执行这个命令,不过这个令牌只能24小时之内有效

如果超过24小时,需要重置令牌

kubeadm token create --print-join-command

执行命令,这个即为新令牌

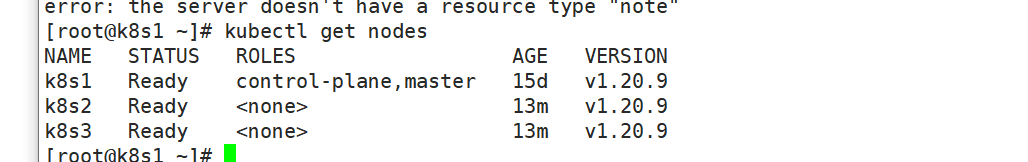

1.11 查看启动情况

成功启动

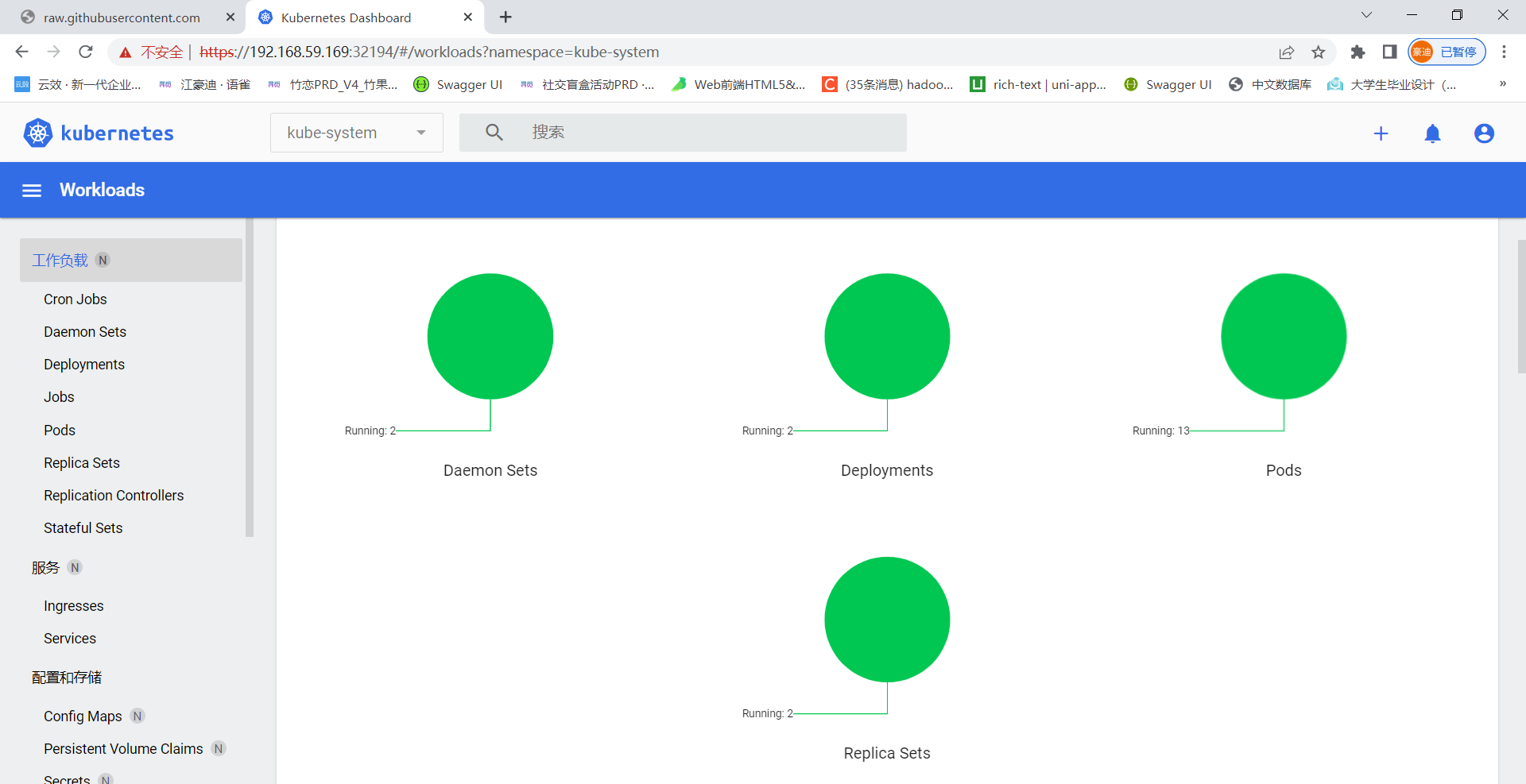

1.12 安装可视化界面

1.12.1 在~目录下面新建dashboard.yaml文件

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1

kind: Namespace

metadata:

name: kubernetes-dashboard

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

ports:

- port: 443

targetPort: 8443

selector:

k8s-app: kubernetes-dashboard

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-certs

namespace: kubernetes-dashboard

type: Opaque

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-csrf

namespace: kubernetes-dashboard

type: Opaque

data:

csrf: ""

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-key-holder

namespace: kubernetes-dashboard

type: Opaque

---

kind: ConfigMap

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-settings

namespace: kubernetes-dashboard

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

rules:

# Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [""]

resources: ["secrets"]

resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]

verbs: ["get", "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics.

- apiGroups: [""]

resources: ["services"]

resourceNames: ["heapster", "dashboard-metrics-scraper"]

verbs: ["proxy"]

- apiGroups: [""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]

verbs: ["get"]

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

rules:

# Allow Metrics Scraper to get metrics from the Metrics server

- apiGroups: ["metrics.k8s.io"]

resources: ["pods", "nodes"]

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

spec:

containers:

- name: kubernetes-dashboard

image: kubernetesui/dashboard:v2.3.1

imagePullPolicy: Always

ports:

- containerPort: 8443

protocol: TCP

args:

- --auto-generate-certificates

- --namespace=kubernetes-dashboard

# Uncomment the following line to manually specify Kubernetes API server Host

# If not specified, Dashboard will attempt to auto discover the API server and connect

# to it. Uncomment only if the default does not work.

# - --apiserver-host=http://my-address:port

volumeMounts:

- name: kubernetes-dashboard-certs

mountPath: /certs

# Create on-disk volume to store exec logs

- mountPath: /tmp

name: tmp-volume

livenessProbe:

httpGet:

scheme: HTTPS

path: /

port: 8443

initialDelaySeconds: 30

timeoutSeconds: 30

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

volumes:

- name: kubernetes-dashboard-certs

secret:

secretName: kubernetes-dashboard-certs

- name: tmp-volume

emptyDir: {}

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

ports:

- port: 8000

targetPort: 8000

selector:

k8s-app: dashboard-metrics-scraper

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: dashboard-metrics-scraper

template:

metadata:

labels:

k8s-app: dashboard-metrics-scraper

annotations:

seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'

spec:

containers:

- name: dashboard-metrics-scraper

image: kubernetesui/metrics-scraper:v1.0.6

ports:

- containerPort: 8000

protocol: TCP

livenessProbe:

httpGet:

scheme: HTTP

path: /

port: 8000

initialDelaySeconds: 30

timeoutSeconds: 30

volumeMounts:

- mountPath: /tmp

name: tmp-volume

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

volumes:

- name: tmp-volume

emptyDir: {}

1.12.2 应用yaml

kubectl apply -f dashboard.yaml

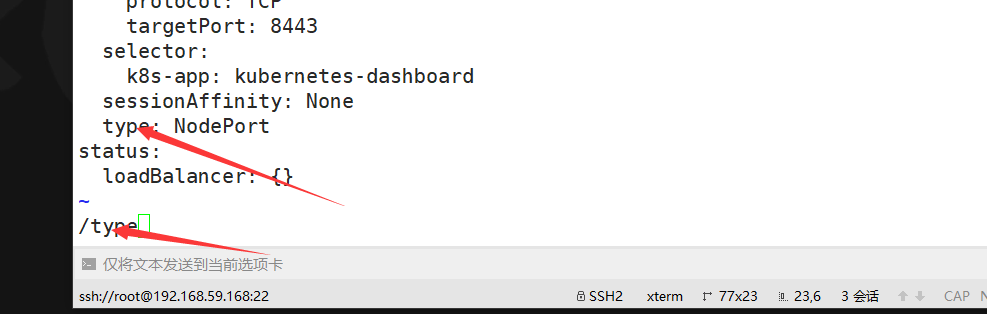

1.12.3 设置访问端口

kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

找到type,修改成NodePort

1.12.4 找到端口,安全组放行

kubectl get svc -A |grep kubernetes-dashboard

## 找到端口,在安全组放行

访问: https://集群任意IP:端口 https://139.198.165.238:32759

1.12.5 创建访问账号

#创建访问账号,准备一个yaml文件; vi dash.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: admin-user

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: admin-user

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: admin-user

namespace: kubernetes-dashboard

kubectl apply -f dash.yaml

#获取访问令牌

kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"

eyJhbGciOiJSUzI1NiIsImtpZCI6InVzQTI2MDFlNTFHM2NYUGM0T05rTlhYV2tPc0NtMUU5VkNKZEVYOGcxczQifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLW02bGRrIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJhZjMzMWU0YS03ZTY4LTRmMzktOGQwZi0xZDgyMTFkZGMyYjgiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.yrv-EZBJOcy4EyBpxJNP-FXxfDFgipKMusT3h6jIbdLeb9WPwQR44Qbuf8MIeCF9jYckoOegLjBn1dH3raIHLAb-dIlbl4hOVGQ9HyWDiPnkU5Lg0QySKQ617Eg1EBu3-3uXcDKEyB37Qx43LupEPnB4fHt7wBHg_8d8GPsE7gDpUE6FHhpbMq4u-gdodLRZW_aOdw-An801qQ7gGnLOs8vUL-_RyEoUsFNl8472yNc7qzg6M6DnQqfTrsFcqY8VN00G7BqIChjVPj7O2nw8ZwuElEudE-S20ZjY-Pn4qscygvNcHUbvFspXOht_FJlnLvtqyqXMw6DJtZtkSyeg9A

2 控制Pod

2.0 名称空间

kubectl create ns hello

kubectl delete ns hello

通过配置文件创建名称空间

1.创建yaml

2.编辑yaml

apiVersion: v1

kind: Namespace

metadata:

name: hello

3.kubectl apply -f hello.yaml

2.1 创建pod

kubectl run mynginx --image=nginx

# 查看default名称空间的Pod

kubectl get pod

# 描述

kubectl describe pod 你自己的Pod名字

# 删除

kubectl delete pod Pod名字

# 查看Pod的运行日志

kubectl logs Pod名字

#通过配置文件删除

kubectl delete -f xxx.yaml

# 每个Pod - k8s都会分配一个ip

kubectl get pod -owide

# 使用Pod的ip+pod里面运行容器的端口

curl 192.168.169.136

#使用部署创建容器,这时候一个挂了可以在别的机器创建

kubectl create deployment mytomcat --image=tomcat:8.5.68

#如果删除就需要使用kubectl delete deploy mytomcat来进行删除

2.2 多副本

kubectl create deployment my-dep –image=nginx –replicas=3

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: my-dep

name: my-dep

spec:

replicas: 3

selector:

matchLabels:

app: my-dep

template:

metadata:

labels:

app: my-dep

spec:

containers:

- image: nginx

name: nginx

2.3 扩缩容

kubectl scale --replicas=5 deployment/my-dep

kubectl edit deployment my-dep

#修改 replicas

2.4 滚动更新

kubectl set image deployment/my-dep nginx=nginx:1.16.1 --record

kubectl rollout status deployment/my-dep

2.5 版本回退

#历史记录

kubectl rollout history deployment/my-dep

#查看某个历史详情

kubectl rollout history deployment/my-dep --revision=2

#回滚(回到上次)

kubectl rollout undo deployment/my-dep

#回滚(回到指定版本)

kubectl rollout undo deployment/my-dep --to-revision=2

2.6 service

#暴露Deploy

kubectl expose deployment my-dep --port=8000 --target-port=80

#使用标签检索Pod

kubectl get pod -l app=my-dep

kubectl get service

2.7 ClusterIP

# 等同于没有--type的

kubectl expose deployment my-dep --port=8000 --target-port=80 --type=ClusterIP

apiVersion: v1

kind: Service

metadata:

labels:

app: my-dep

name: my-dep

spec:

ports:

- port: 8000

protocol: TCP

targetPort: 80

selector:

app: my-dep

type: ClusterIP

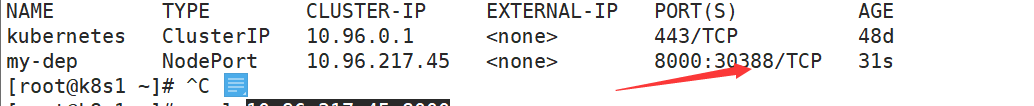

2.8 NodePort

nodePort就可以通过外网进行访问

kubectl expose deployment my-dep --port=8000 --target-port=80 --type=NodePort

通过各个虚拟机ip的30388端口即可访问

apiVersion: v1

kind: Service

metadata:

labels:

app: my-dep

name: my-dep

spec:

ports:

- port: 8000

protocol: TCP

targetPort: 80

selector:

app: my-dep

type: NodePort

2.9 ingress

service统一网关入口

配置ingress.yaml

apiVersion: v1

kind: Namespace

metadata:

name: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

---

# Source: ingress-nginx/templates/controller-serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx

namespace: ingress-nginx

automountServiceAccountToken: true

---

# Source: ingress-nginx/templates/controller-configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller

namespace: ingress-nginx

data:

---

# Source: ingress-nginx/templates/clusterrole.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

name: ingress-nginx

rules:

- apiGroups:

- ''

resources:

- configmaps

- endpoints

- nodes

- pods

- secrets

verbs:

- list

- watch

- apiGroups:

- ''

resources:

- nodes

verbs:

- get

- apiGroups:

- ''

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- extensions

- networking.k8s.io # k8s 1.14+

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- events

verbs:

- create

- patch

- apiGroups:

- extensions

- networking.k8s.io # k8s 1.14+

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io # k8s 1.14+

resources:

- ingressclasses

verbs:

- get

- list

- watch

---

# Source: ingress-nginx/templates/clusterrolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

name: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-role.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx

namespace: ingress-nginx

rules:

- apiGroups:

- ''

resources:

- namespaces

verbs:

- get

- apiGroups:

- ''

resources:

- configmaps

- pods

- secrets

- endpoints

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- extensions

- networking.k8s.io # k8s 1.14+

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- extensions

- networking.k8s.io # k8s 1.14+

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io # k8s 1.14+

resources:

- ingressclasses

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- configmaps

resourceNames:

- ingress-controller-leader-nginx

verbs:

- get

- update

- apiGroups:

- ''

resources:

- configmaps

verbs:

- create

- apiGroups:

- ''

resources:

- events

verbs:

- create

- patch

---

# Source: ingress-nginx/templates/controller-rolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-service-webhook.yaml

apiVersion: v1

kind: Service

metadata:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller-admission

namespace: ingress-nginx

spec:

type: ClusterIP

ports:

- name: https-webhook

port: 443

targetPort: webhook

selector:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

---

# Source: ingress-nginx/templates/controller-service.yaml

apiVersion: v1

kind: Service

metadata:

annotations:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

type: NodePort

ports:

- name: http

port: 80

protocol: TCP

targetPort: http

- name: https

port: 443

protocol: TCP

targetPort: https

selector:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

---

# Source: ingress-nginx/templates/controller-deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

selector:

matchLabels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

revisionHistoryLimit: 10

minReadySeconds: 0

template:

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

spec:

dnsPolicy: ClusterFirst

containers:

- name: controller

image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/ingress-nginx-controller:v0.46.0

imagePullPolicy: IfNotPresent

lifecycle:

preStop:

exec:

command:

- /wait-shutdown

args:

- /nginx-ingress-controller

- --election-id=ingress-controller-leader

- --ingress-class=nginx

- --configmap=$(POD_NAMESPACE)/ingress-nginx-controller

- --validating-webhook=:8443

- --validating-webhook-certificate=/usr/local/certificates/cert

- --validating-webhook-key=/usr/local/certificates/key

securityContext:

capabilities:

drop:

- ALL

add:

- NET_BIND_SERVICE

runAsUser: 101

allowPrivilegeEscalation: true

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

- name: LD_PRELOAD

value: /usr/local/lib/libmimalloc.so

livenessProbe:

failureThreshold: 5

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

ports:

- name: http

containerPort: 80

protocol: TCP

- name: https

containerPort: 443

protocol: TCP

- name: webhook

containerPort: 8443

protocol: TCP

volumeMounts:

- name: webhook-cert

mountPath: /usr/local/certificates/

readOnly: true

resources:

requests:

cpu: 100m

memory: 90Mi

nodeSelector:

kubernetes.io/os: linux

serviceAccountName: ingress-nginx

terminationGracePeriodSeconds: 300

volumes:

- name: webhook-cert

secret:

secretName: ingress-nginx-admission

---

# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml

# before changing this value, check the required kubernetes version

# https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites

apiVersion: admissionregistration.k8s.io/v1

kind: ValidatingWebhookConfiguration

metadata:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

name: ingress-nginx-admission

webhooks:

- name: validate.nginx.ingress.kubernetes.io

matchPolicy: Equivalent

rules:

- apiGroups:

- networking.k8s.io

apiVersions:

- v1beta1

operations:

- CREATE

- UPDATE

resources:

- ingresses

failurePolicy: Fail

sideEffects: None

admissionReviewVersions:

- v1

- v1beta1

clientConfig:

service:

namespace: ingress-nginx

name: ingress-nginx-controller-admission

path: /networking/v1beta1/ingresses

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

rules:

- apiGroups:

- admissionregistration.k8s.io

resources:

- validatingwebhookconfigurations

verbs:

- get

- update

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

rules:

- apiGroups:

- ''

resources:

- secrets

verbs:

- get

- create

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml

apiVersion: batch/v1

kind: Job

metadata:

name: ingress-nginx-admission-create

annotations:

helm.sh/hook: pre-install,pre-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

spec:

template:

metadata:

name: ingress-nginx-admission-create

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

spec:

containers:

- name: create

image: docker.io/jettech/kube-webhook-certgen:v1.5.1

imagePullPolicy: IfNotPresent

args:

- create

- --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc

- --namespace=$(POD_NAMESPACE)

- --secret-name=ingress-nginx-admission

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

restartPolicy: OnFailure

serviceAccountName: ingress-nginx-admission

securityContext:

runAsNonRoot: true

runAsUser: 2000

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml

apiVersion: batch/v1

kind: Job

metadata:

name: ingress-nginx-admission-patch

annotations:

helm.sh/hook: post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

spec:

template:

metadata:

name: ingress-nginx-admission-patch

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

spec:

containers:

- name: patch

image: docker.io/jettech/kube-webhook-certgen:v1.5.1

imagePullPolicy: IfNotPresent

args:

- patch

- --webhook-name=ingress-nginx-admission

- --namespace=$(POD_NAMESPACE)

- --patch-mutating=false

- --secret-name=ingress-nginx-admission

- --patch-failure-policy=Fail

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

restartPolicy: OnFailure

serviceAccountName: ingress-nginx-admission

securityContext:

runAsNonRoot: true

runAsUser: 2000

kubectl get pod,svc -n ingress-nginx

#查看

2.9.1 ingress域名访问

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: ingress-host-bar

spec:

ingressClassName: nginx

rules:

- host: "hello.atguigu.com"

http:

paths:

- pathType: Prefix

path: "/"

backend:

service:

name: hello-server

port:

number: 8000

- host: "demo.atguigu.com"

http:

paths:

- pathType: Prefix

path: "/nginx" # 把请求会转给下面的服务,下面的服务一定要能处理这个路径,不能处理就是404

backend:

service:

name: nginx-demo ## java,比如使用路径重写,去掉前缀nginx

port:

number: 8000

2.9.2 路径重写

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

nginx.ingress.kubernetes.io/rewrite-target: /$2

name: ingress-host-bar

spec:

ingressClassName: nginx

rules:

- host: "hello.atguigu.com"

http:

paths:

- pathType: Prefix

path: "/"

backend:

service:

name: hello-server

port:

number: 8000

- host: "demo.atguigu.com"

http:

paths:

- pathType: Prefix

path: "/nginx(/|$)(.*)" # 把请求会转给下面的服务,下面的服务一定要能处理这个路径,不能处理就是404

backend:

service:

name: nginx-demo ## java,比如使用路径重写,去掉前缀nginx

port:

number: 8000

2.9.3 流量限制

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: ingress-limit-rate

annotations:

nginx.ingress.kubernetes.io/limit-rps: "1"

spec:

ingressClassName: nginx

rules:

- host: "haha.atguigu.com"

http:

paths:

- pathType: Exact

path: "/"

backend:

service:

name: nginx-demo

port:

number: 8000

2.10 存储抽象

2.10.1 环境准备

所有节点:

#所有机器安装

yum install -y nfs-utils

主节点:

#nfs主节点

echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports

mkdir -p /nfs/data

systemctl enable rpcbind --now

systemctl enable nfs-server --now

#配置生效

exportfs -r

从节点:

#更改为主节点的ip地址

showmount -e 192.168.59.168

#执行以下命令挂载 nfs 服务器上的共享目录到本机路径 /root/nfsmount

mkdir -p /nfs/data

mount -t nfs 192.168.59.168:/nfs/data /nfs/data

# 写入一个测试文件

echo "hello nfs server" > /nfs/data/test.txt

原生方式数据挂载

主节点创建目录/nfs/data/nginx-pv

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: nginx-pv-demo

name: nginx-pv-demo

spec:

replicas: 2

selector:

matchLabels:

app: nginx-pv-demo

template:

metadata:

labels:

app: nginx-pv-demo

spec:

containers:

- image: nginx

name: nginx

volumeMounts:

- name: html

mountPath: /usr/share/nginx/html

volumes:

- name: html

nfs:

server: 192.168.59.168

path: /nfs/data/nginx-pv

2.10.2 pv-pvc

1.创建pv池

#nfs主节点

mkdir -p /nfs/data/01

mkdir -p /nfs/data/02

mkdir -p /nfs/data/03

apiVersion: v1

kind: PersistentVolume

metadata:

name: pv01-10m

spec:

capacity:

storage: 10M

accessModes:

- ReadWriteMany

storageClassName: nfs

nfs:

path: /nfs/data/01

server: 192.168.59.168

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: pv02-1gi

spec:

capacity:

storage: 1Gi

accessModes:

- ReadWriteMany

storageClassName: nfs

nfs:

path: /nfs/data/02

server: 192.168.59.168

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: pv03-3gi

spec:

capacity:

storage: 3Gi

accessModes:

- ReadWriteMany

storageClassName: nfs

nfs:

path: /nfs/data/03

server: 192.168.59.168

2 PVC的创建和绑定

创建pvc

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: nginx-pvc

spec:

accessModes:

- ReadWriteMany

resources:

requests:

storage: 200Mi

storageClassName: nfs

创建pod绑定pvc

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: nginx-deploy-pvc

name: nginx-deploy-pvc

spec:

replicas: 2

selector:

matchLabels:

app: nginx-deploy-pvc

template:

metadata:

labels:

app: nginx-deploy-pvc

spec:

containers:

- image: nginx

name: nginx

volumeMounts:

- name: html

mountPath: /usr/share/nginx/html

volumes:

- name: html

persistentVolumeClaim:

claimName: nginx-pvc

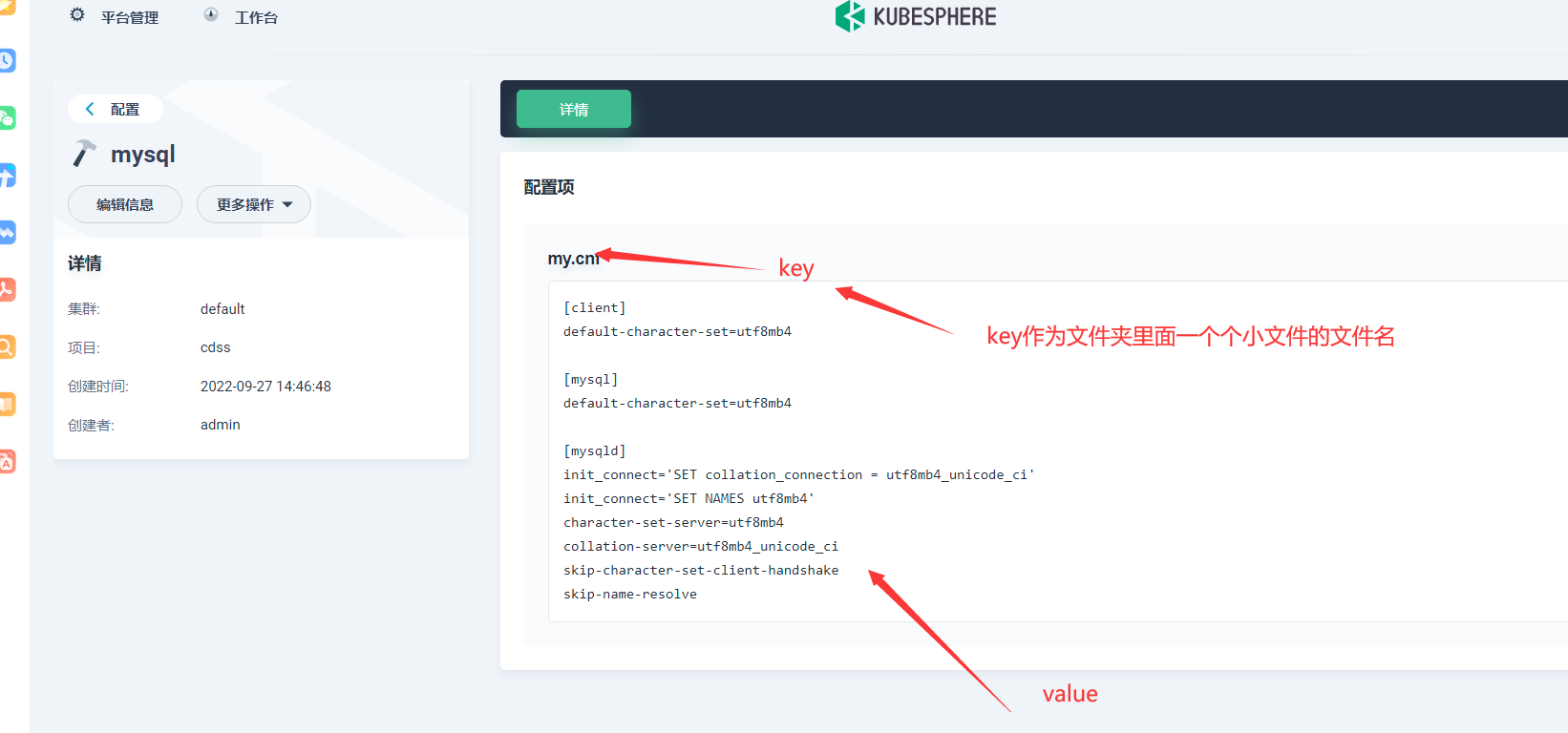

2.10.3 ConfigMap

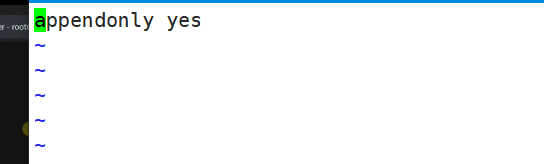

1 把之前的配置文件创建为配置集

创建一个配置文件redis.conf

# 创建配置,redis保存到k8s的etcd;

kubectl create cm redis-conf --from-file=redis.conf

kubectl get cm

#查看当前configMap

创建yaml文件,并用kubectl apply导入

apiVersion: v1

data: #data是所有真正的数据,key:默认是文件名 value:配置文件的内容

redis.conf: |

appendonly yes

kind: ConfigMap

metadata:

name: redis-conf

namespace: default

创建pod

apiVersion: v1

kind: Pod

metadata:

name: redis

spec:

containers:

- name: redis

image: redis

command:

- redis-server

- "/redis-master/redis.conf" #指的是redis容器内部的位置

ports:

- containerPort: 6379

volumeMounts:

- mountPath: /data

name: data

- mountPath: /redis-master

name: config

volumes:

- name: data

emptyDir: {}

- name: config

configMap:

name: redis-conf

items:

- key: redis.conf

path: redis.conf

检查默认配置

kubectl exec -it redis -- redis-cli

127.0.0.1:6379> CONFIG GET appendonly

127.0.0.1:6379> CONFIG GET requirepass

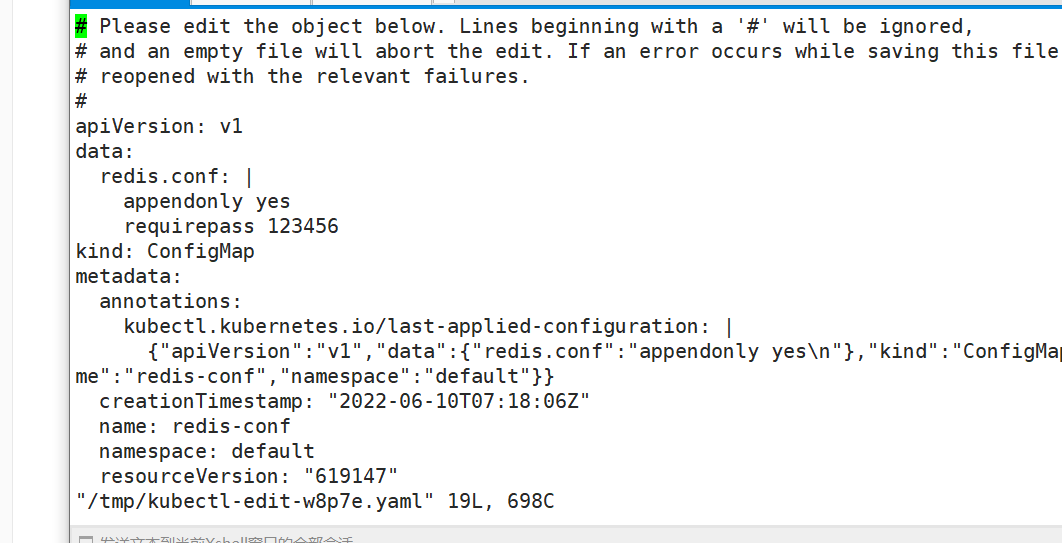

#修改redis.conf内容

kubectl edit cm redis-conf

然后查看,发现改变

2.11 secret

Secret 对象类型用来保存敏感信息,例如密码、OAuth 令牌和 SSH 密钥。 将这些信息放在 secret 中比放在 Pod 的定义或者 容器镜像 中来说更加安全和灵活。

kubectl create secret docker-registry leifengyang-docker \

--docker-username=leifengyang \

--docker-password=Lfy123456 \

--docker-email=534096094@qq.com

##命令格式

kubectl create secret docker-registry regcred \

--docker-server=<你的镜像仓库服务器> \

--docker-username=<你的用户名> \

--docker-password=<你的密码> \

--docker-email=<你的邮箱地址>

apiVersion: v1

kind: Pod

metadata:

name: private-nginx

spec:

containers:

- name: private-nginx

image: leifengyang/guignginx:v1.0

imagePullSecrets:

- name: leifengyang-docker

3 快速搭建kubernetes

3.1 开通服务器

4c8g;centos7.9;防火墙放行 30000~32767;指定hostname

hostnamectl set-hostname node1

3.2 快速安装

官网教程

在 Linux 上以 All-in-One 模式安装 KubeSphere

步骤 1:准备 Linux 机器

若要以 All-in-One 模式进行安装,您仅需参考以下对机器硬件和操作系统的要求准备一台主机。

硬件推荐配置

| 操作系统 | 最低配置 |

|---|---|

| Ubuntu 16.04, 18.04, 20.04, 22.04 | 2 核 CPU,4 GB 内存,40 GB 磁盘空间 |

| Debian Buster, Stretch | 2 核 CPU,4 GB 内存,40 GB 磁盘空间 |

| CentOS 7.x | 2 核 CPU,4 GB 内存,40 GB 磁盘空间 |

| Red Hat Enterprise Linux 7 | 2 核 CPU,4 GB 内存,40 GB 磁盘空间 |

| SUSE Linux Enterprise Server 15/openSUSE Leap 15.2 | 2 核 CPU,4 GB 内存,40 GB 磁盘空间 |

依赖项要求

KubeKey 可以将 Kubernetes 和 KubeSphere 一同安装。针对不同的 Kubernetes 版本,需要安装的依赖项可能有所不同。您可以参考以下列表,查看是否需要提前在节点上安装相关的依赖项。

| 依赖项 | Kubernetes 版本 ≥ 1.18 | Kubernetes 版本 < 1.18 |

|---|---|---|

| socat | 必须 | 可选但建议 |

| conntrack | 必须 | 可选但建议 |

| ebtables | 可选但建议 | 可选但建议 |

| ipset | 可选但建议 | 可选但建议 |

安装依赖组件

也就是上面的Kubernetes依赖里的内容,全部安装

centos

yum install -y ebtables socat ipset conntrack

ubuntu

apt-get install -y ebtables socat ipset conntrack

基本配置要求

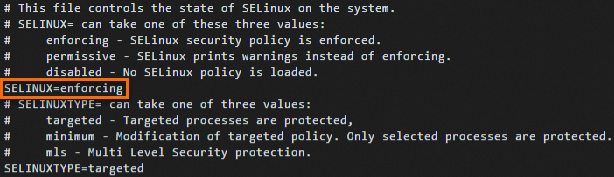

安装 k8s 之前需要关闭 SELinux、关闭 Swap 分区、关闭防火墙

关闭SELinux

centos

1.查看 selinux 状态

sestatus

若系统返回的参数信息SELinux status显示为disabled,则表示SELinux已关闭。

2.临时关闭SeLinux

setenforce 0

3.永久关闭SeLinux ,运行运行以下命令,编辑SELinux的config配置文件。

vi /etc/selinux/config

找到SELINUX=enforcing或SELINUX=permissive字段,按i进入编辑模式,将参数修改为SELINUX=disabled。

ubuntu

setenforce 0 && sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinuxconfig

关闭防火墙

centos

# 停止防火墙服务

systemctl stop firewalld

# 禁用防火墙服务

systemctl disable firewalld

# 查看防火墙状态

systemctl status firewalld

ubuntu

# 停止防火墙服务

sudo systemctl stop ufw.service

# 禁用防火墙服务

sudo systemctl disable ufw.service

# 查看防火墙状态

sudo ufw status

如果输出:

Status: inactive,说明防火墙已经成功关闭!

关闭swap分区

centos

swapoff -a

echo "vm.swappiness=0" >> /etc/sysctl.conf

sysctl -p /etc/sysctl.conf

unbuntu

swapoff -a && sed -ri 's/.*swap.*/#&/' /etc/fstab

步骤 2:下载 KubeKey

从 GitHub Release Page 下载 KubeKey 或直接使用以下命令(ubuntu使用bash替换sh)。

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

备注 执行以上命令会下载最新版

KubeKey,您可以修改命令中的版本号下载指定版本。

为 kk 添加可执行权限:

chmod +x kk

步骤 3:开始安装

只需执行一个命令即可进行安装,其模板如下所示:

./kk create cluster [--with-kubernetes version] [--with-kubesphere version]

同时安装 Kubernetes 和 KubeSphere

./kk create cluster --with-kubernetes v1.22.12 --with-kubesphere v3.4.1

- 安装 KubeSphere 3.4 的建议 Kubernetes 版本:v1.20.x、v1.21.x、v1.22.x、v1.23.x、* v1.24.x、* v1.25.x 和 * v1.26.x。带星号的版本可能出现边缘节点部分功能不可用的情况。因此,如需使用边缘节点,推荐安装 v1.23.x。如果不指定

Kubernetes版本,KubeKey将默认安装Kubernetesv1.23.10。有关受支持的Kubernetes版本的更多信息,请参见支持矩阵。 - 一般来说,对于 All-in-One 安装,您无需更改任何配置。

- 如果您在这一步的命令中不添加标志

--with-kubesphere,则不会部署KubeSphere,KubeKey将只安装Kubernetes。如果您添加标志 --with-kubesphere时不指定KubeSphere版本,则会安装最新版本的KubeSphere。 KubeKey会默认安装 OpenEBS 为开发和测试环境提供 LocalPV 以方便新用户。对于其他存储类型,请参见持久化存储配置。

执行该命令后,KubeKey将检查您的安装环境,结果显示在一张表格中。有关详细信息,请参见节点要求和依赖项要求。输入yes 继续安装流程

步骤 4:验证安装结果

输入以下命令以检查安装结果。

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

输出信息会显示 Web 控制台的 IP 地址和端口号,默认的 NodePort 是 30880。现在,您可以使用默认的帐户和密码 (admin/P@88w0rd) 通过 :30880 访问控制台。

#####################################################

### Welcome to KubeSphere! ###

#####################################################

Console: http://192.168.0.2:30880

Account: admin

Password: P@88w0rd

NOTES:

1. After you log into the console, please check the

monitoring status of service components in

"Cluster Management". If any service is not

ready, please wait patiently until all components

are up and running.

2. Please change the default password after login.

#####################################################

https://kubesphere.io 20xx-xx-xx xx:xx:xx

#####################################################

备注 您可能需要配置端口转发规则并在安全组中开放端口,以便外部用户访问控制台。

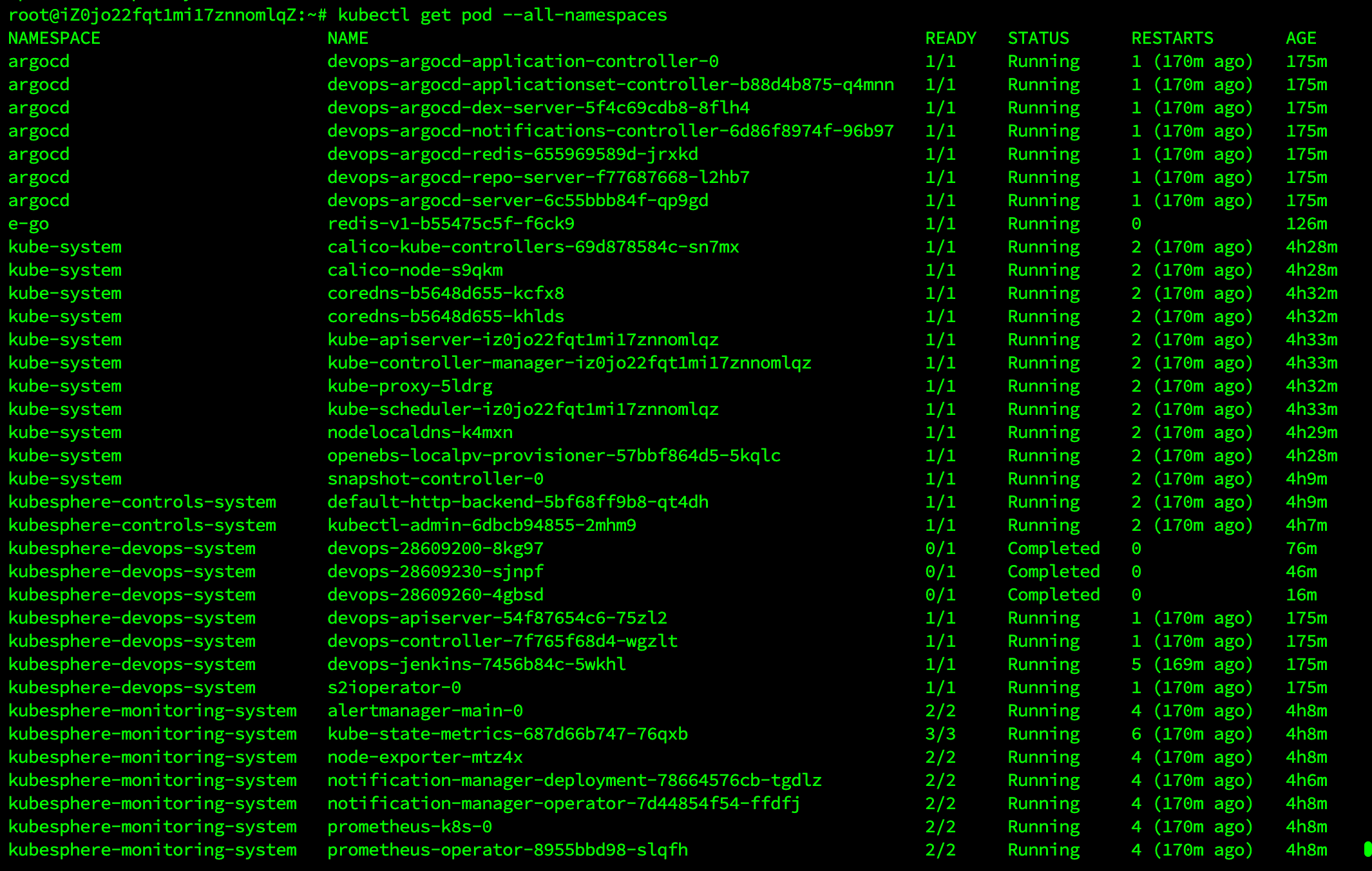

登录至控制台后,您可以在系统组件中查看各个组件的状态。如果要使用相关服务,您可能需要等待部分组件启动并运行。您也可以使用 kubectl get pod \--all-namespaces 来检查 KubeSphere 相关组件的运行状况。

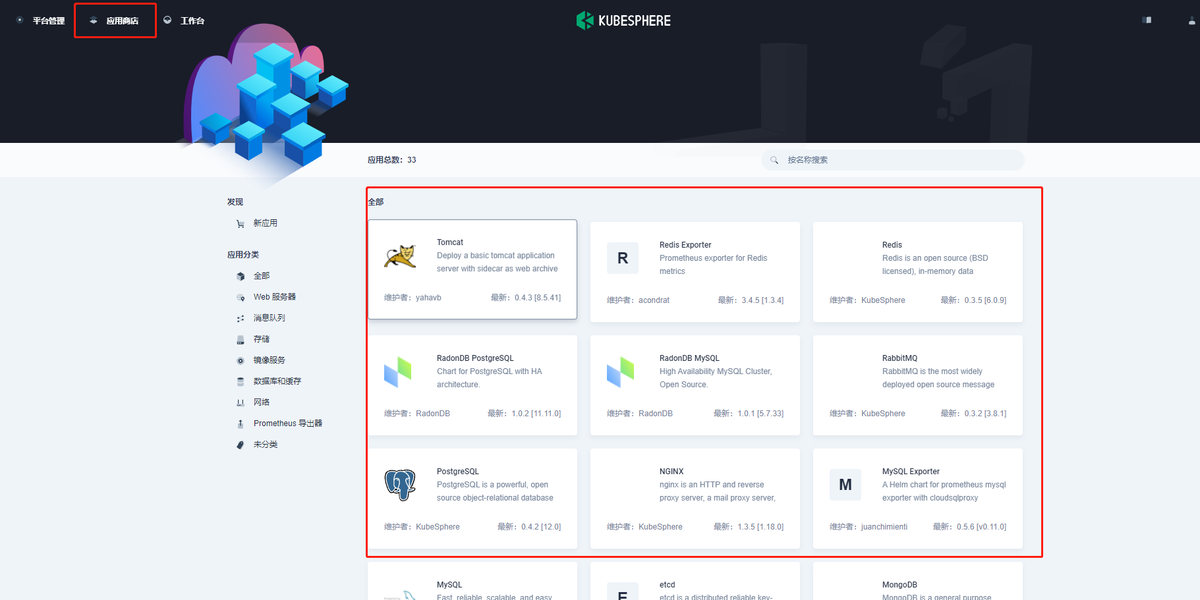

开启KubeSphere 应用商店

KubeSphere 应用商店

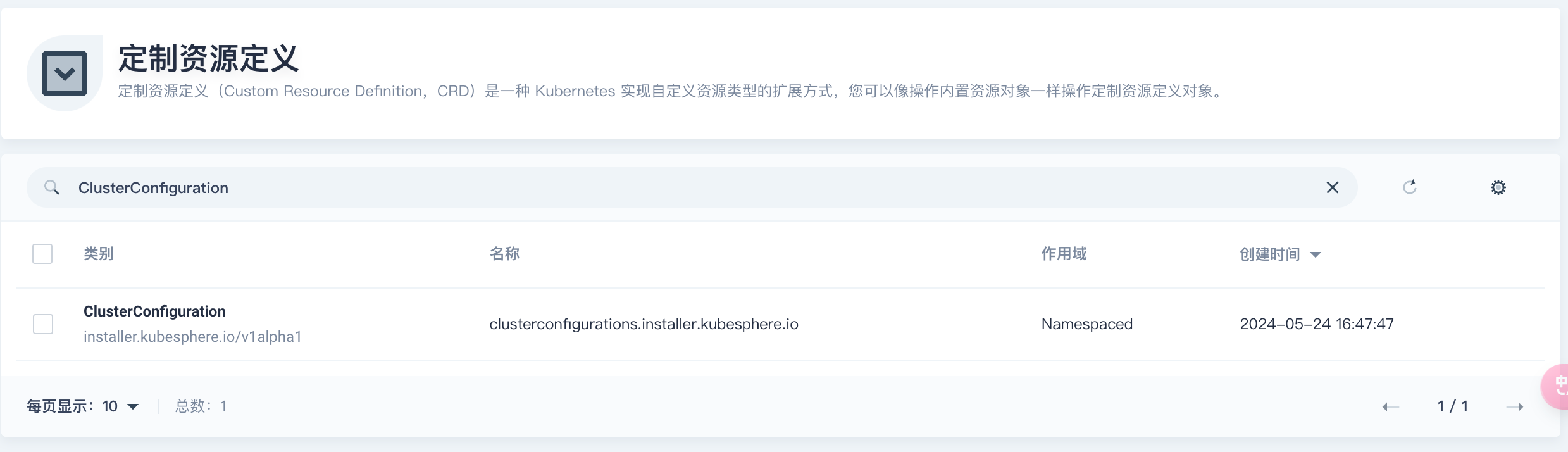

使用admin登录Kubersphere平台,进入集群管理页面,然后点击【定制资源定义】,在右边搜索框搜索ClusterConfiguration,如下,然后点击搜索到的结果

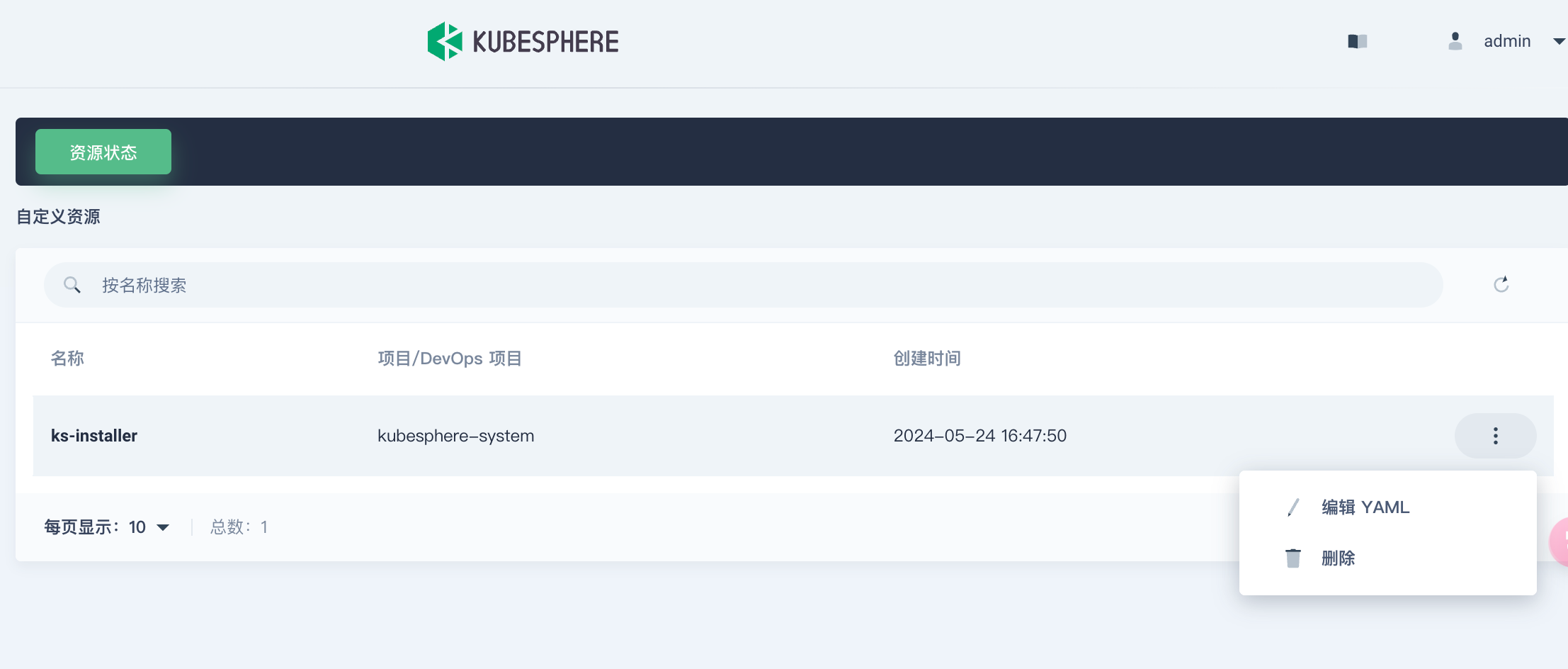

然后点击右边的三个点,在弹出的菜单中点击【编辑YAML】

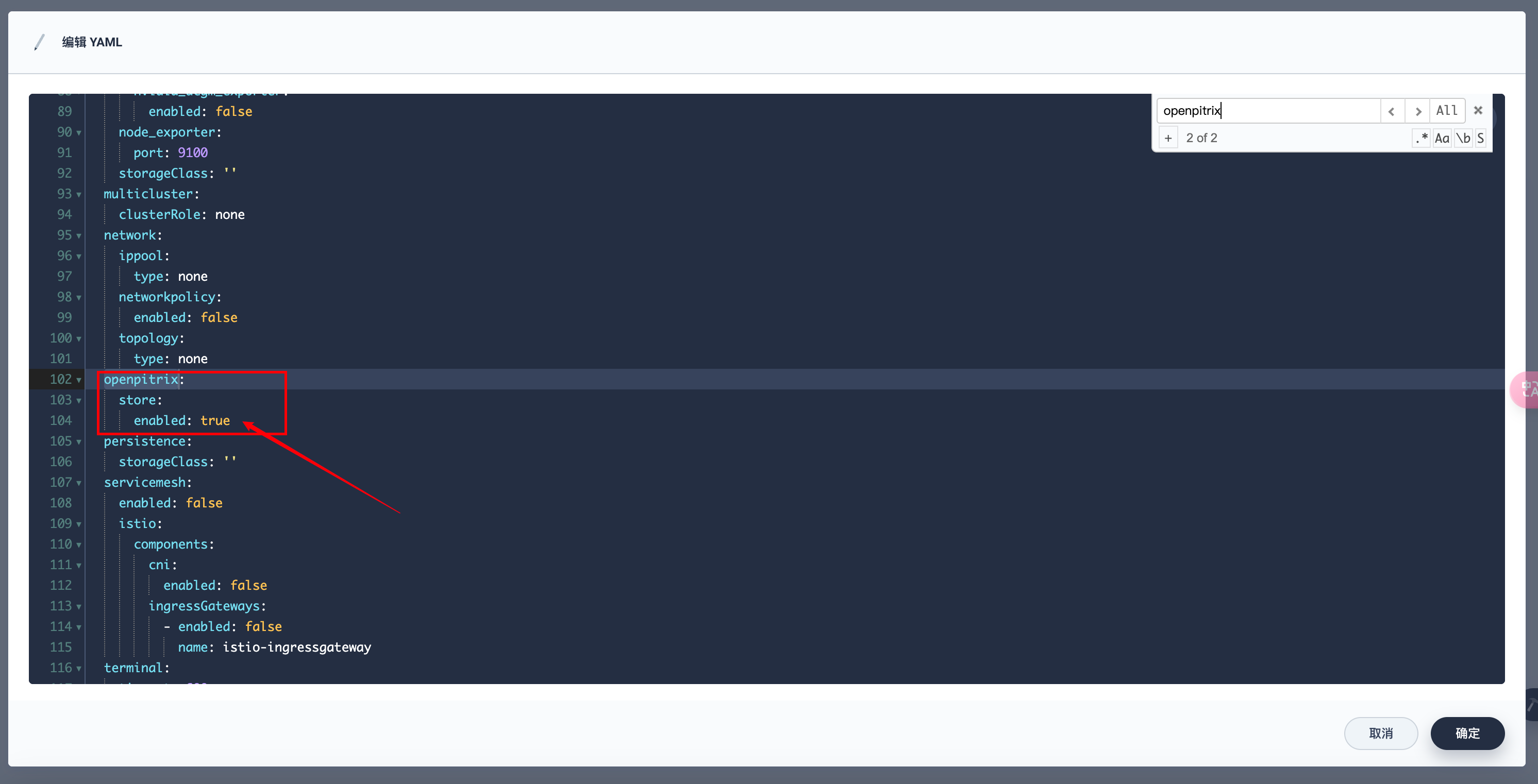

搜索 openpitrix,并将 enabled 的 false 改为 true,完成后保存

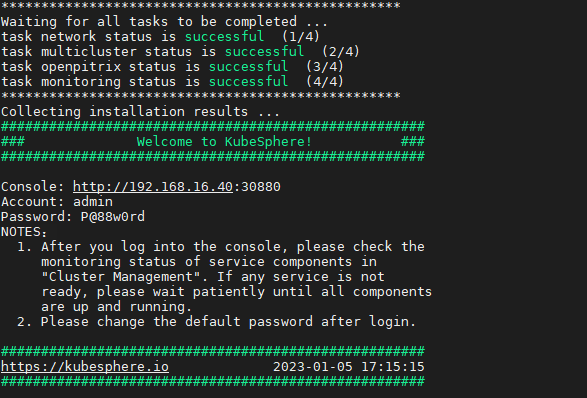

然后可以到kubernetes集群后台执行如下命令查看启用日志

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

安装日志出现如下内容,表示安装成功

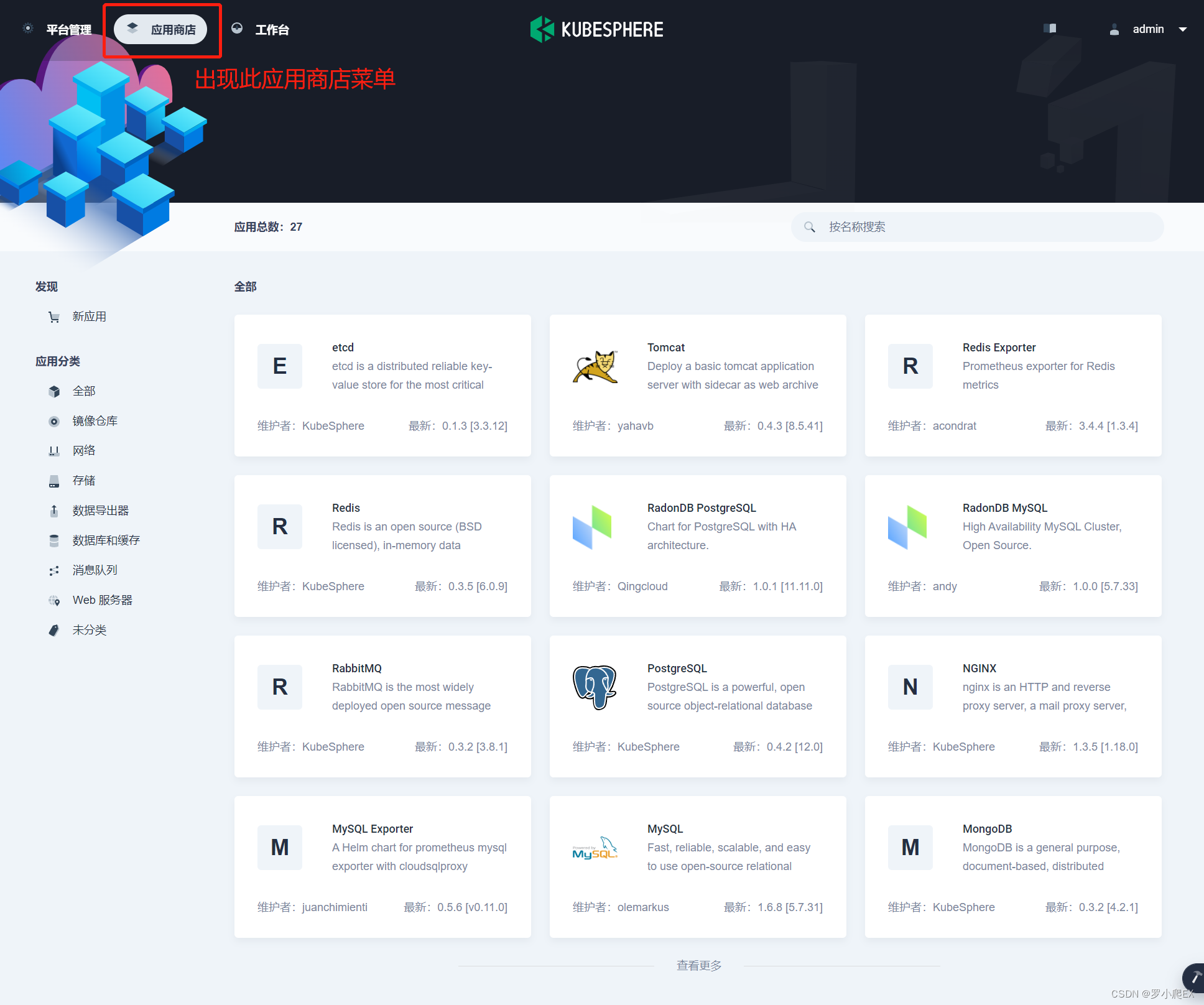

安装完成后,Kubersphere平台会显示应用商店,点击进去如下所示

也可以在不登录控制台的情况下直接访问 <节点 IP 地址>:30880/apps 进入应用商店。

如 http://192.168.3.120:30880/apps

且KubeSphere 3.2.x 中的应用商店启用后,OpenPitrix 页签不会显示在系统组件页面。

启用可插拔组件

概述

从 2.1.0 版本开始,KubeSphere 解耦了一些核心功能组件。这些组件设计成了可插拔式,您可以在安装之前或之后启用它们。如果您不启用它们,KubeSphere 会默认以最小化进行安装部署。

不同的可插拔组件部署在不同的命名空间中。您可以根据需求启用任意组件。强烈建议您安装这些可插拔组件来深度体验 KubeSphere 提供的全栈特性和功能。

资源要求

在您启用可插拔组件之前,请确保您的环境中有足够的资源,具体参见下表。否则,可能会因为缺乏资源导致组件崩溃。

⚠️ 注意 CPU 和内存的资源请求和限制均指单个副本的要求。

- KubeSphere 应用商店

- KubeSphere DevOps

- KubeSphere 日志系统

- KubeSphere 事件系统

- KubeSphere 告警系统

- KubeSphere 审计日志

- KubeSphere 服务网格

- 网络策略

- Metrics Server

- 服务拓扑图

- 容器组 IP 池

- KubeEdge

参考文献

Ubuntu关闭防火墙、关闭selinux、关闭swap_ubuntu 关闭selinux-CSDN博客

Llinux 开启或关闭SELinux - 飞行日志 - 博客园

安装部署KubeSphere管理kubernetes_error: pipeline[createclusterpipeline] execute fai-CSDN博客

使用kubekey部署k8s集群和kubesphere、在已有k8s集群上部署kubesphere-CSDN博客

Kubersphere—-Kubersphere启用应用商店_kubesphere开启应用商店-CSDN博客

Kubesphere应用商店_kubesphere开启应用商店-CSDN博客

kubernetes之5—kubesphere管理平台 - 原因与结果 - 博客园

相关视频

云原生Java架构师的第一课K8s+Docker+KubeSphere+DevOps

3.3 通过minikube安装

安装 Minikube

Minikube 是一个单文件的二进制包,安装十分简单,在已经完成Docker 安装的前提下,使用以下命令可以下载并安装最新版的 Minikube。

curl -Lo minikube https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64 && chmod +x minikube && sudo mv minikube /usr/local/bin/

安装 Kubectl 工具

Minikube 中很多提供了许多子命令以代替 Kubectl 的功能,安装 Minikube 时并不会一并安装 Kubectl。但是 Kubectl 作为集群管理的命令行,要了解 Kubernetes 是无论如何绕不过去的,通过以下命令可以独立安装 Kubectl 工具。

curl -LO https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/am

启动 Kubernetes 集群

有了 Minikube,通过 start 子命令就可以一键部署和启动 Kubernetes 集群了,具体命令如下:

minikube start --iso-url=https://kubernetes.oss-cn-hangzhou.aliyuncs.com/minikube/iso/minikube-v1.6.0.iso --registry-mirror=https://registry.docker-cn.com --image-mirror-country=cn --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --vm-driver=none --memory=2200mb

然后看报错,需要什么安装什么

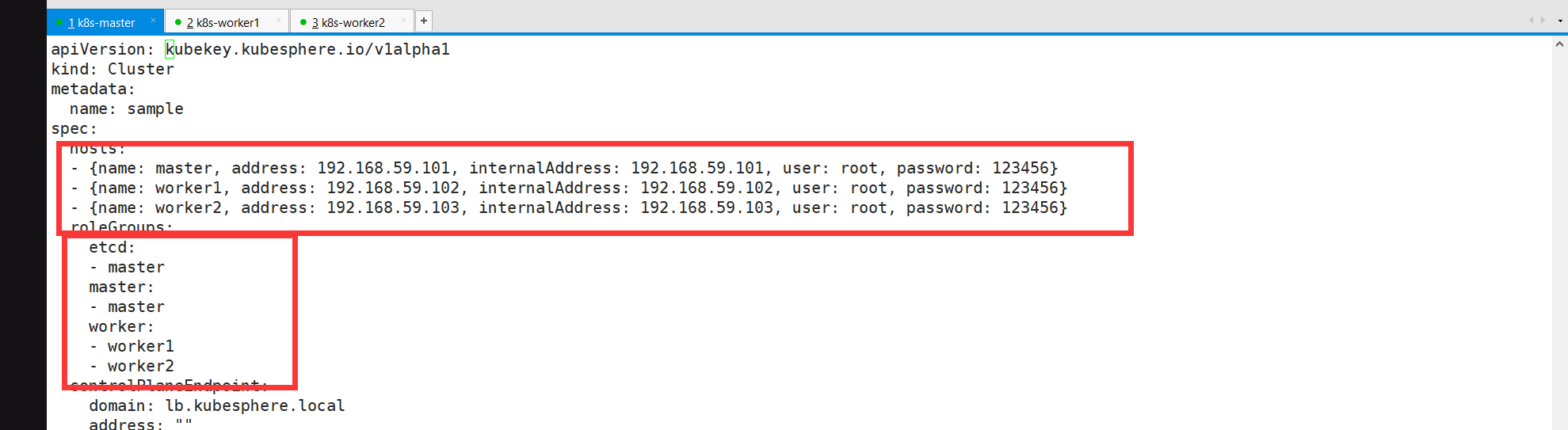

4 快速搭建kubernetes集群

4.1 修改linux主机名

hostnamectl set-hostname master

4.2 在master节点下载KubeKey

export KKZONE=cn

curl -sfL https://get-kk.kubesphere.io | VERSION=v1.1.1 sh -

chmod +x kk

4.3 创建集群配置文件

./kk create config --with-kubernetes v1.20.4 --with-kubesphere v3.1.1

修改配置

4.4 创建集群

./kk create cluster -f config-sample.yaml

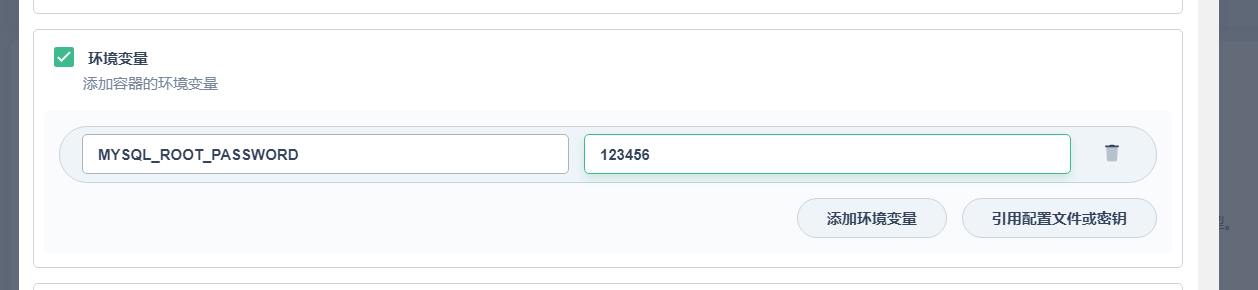

5 使用kubephere创建mysql

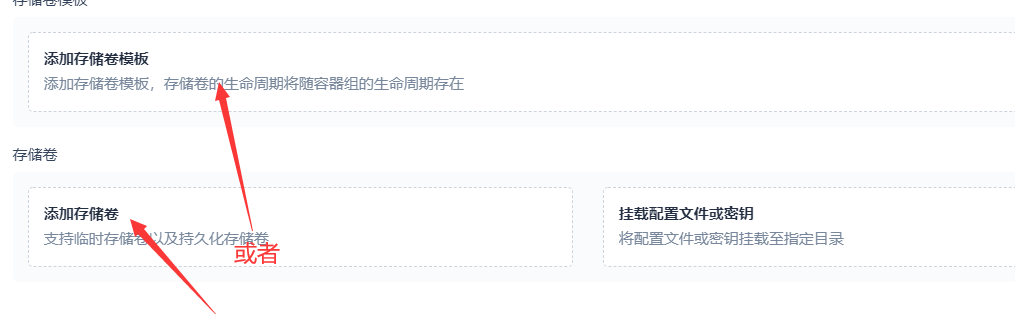

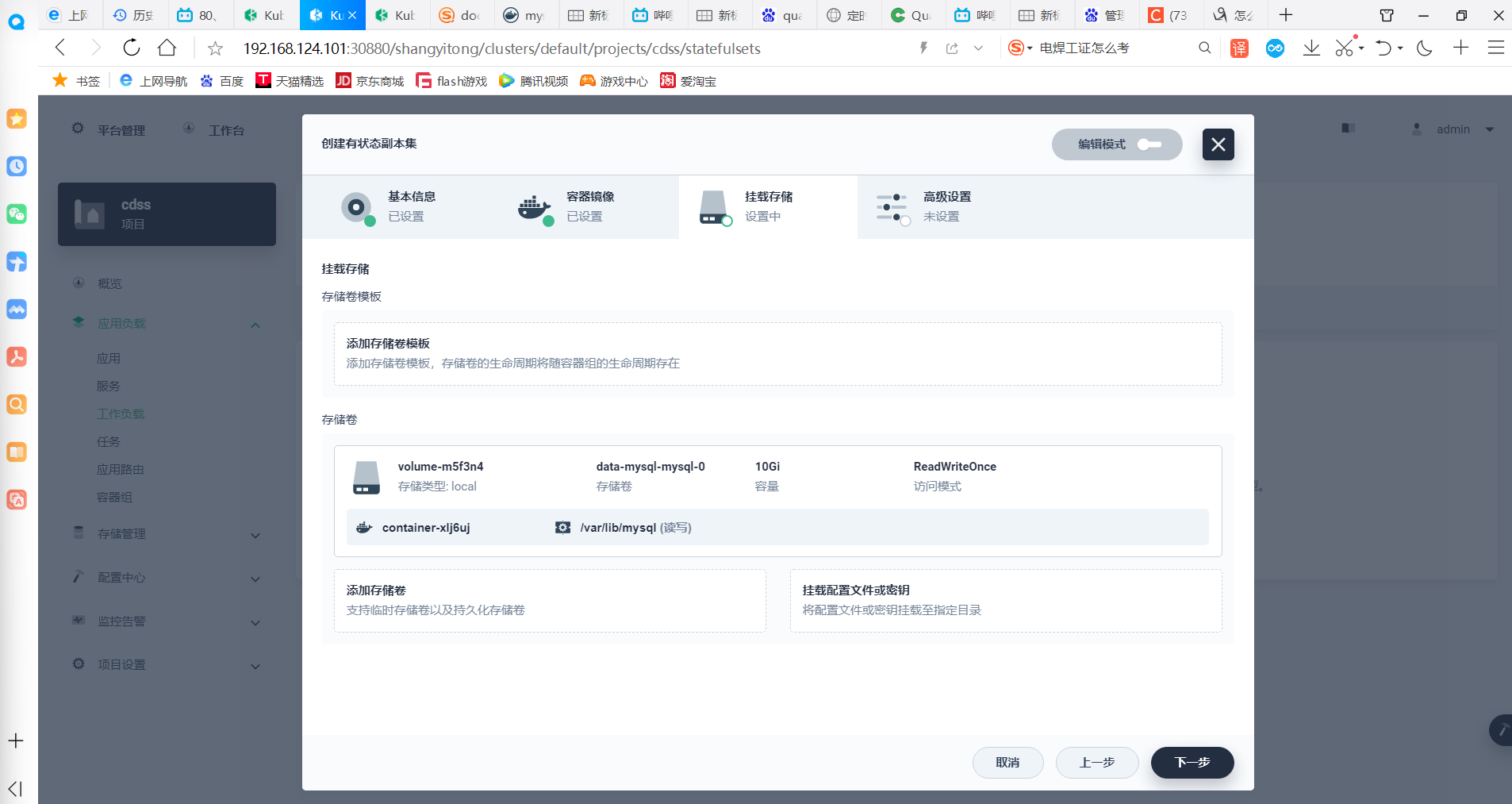

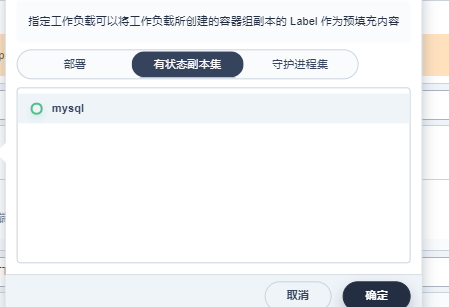

5.1 设置配置

5.2 创建mysql副本

下一步

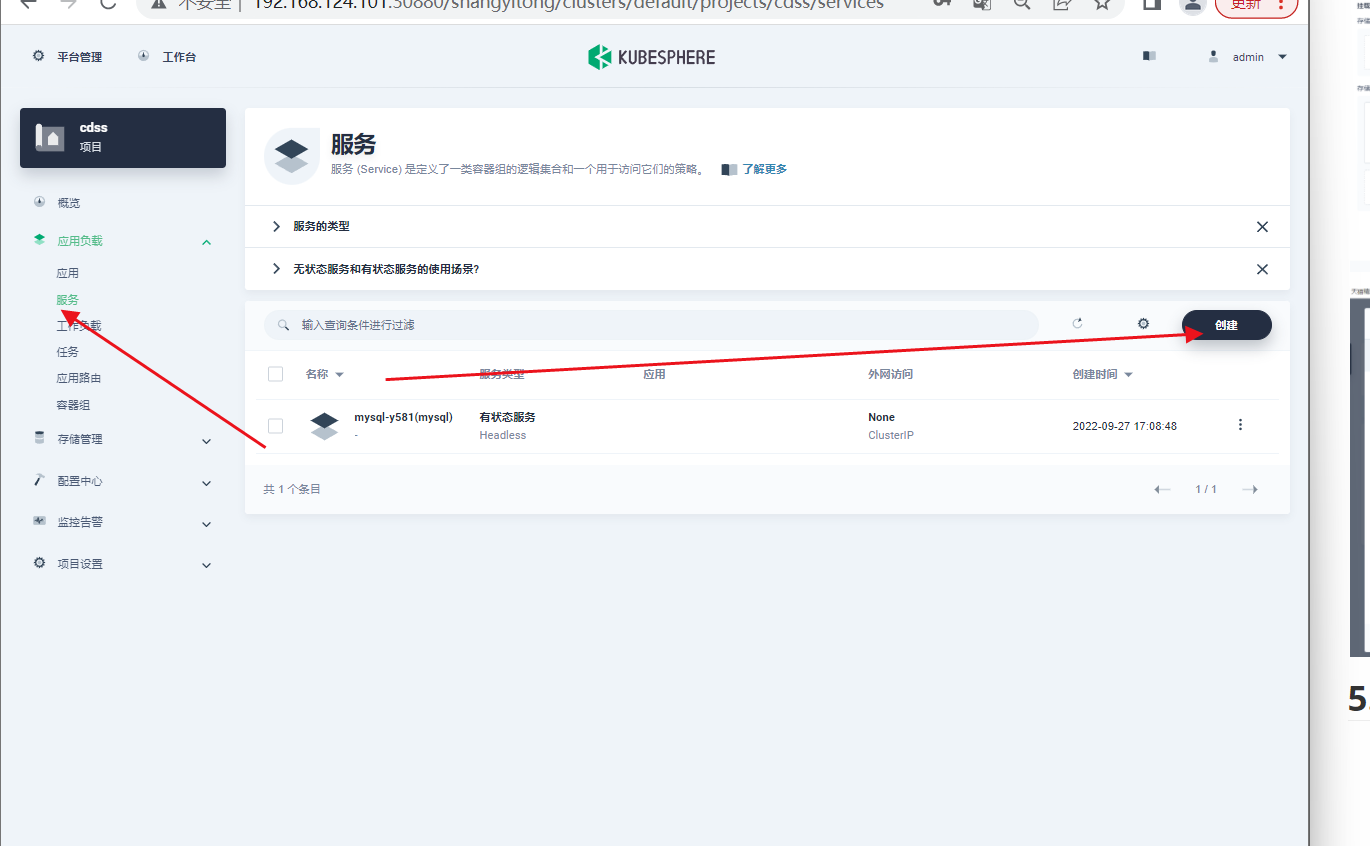

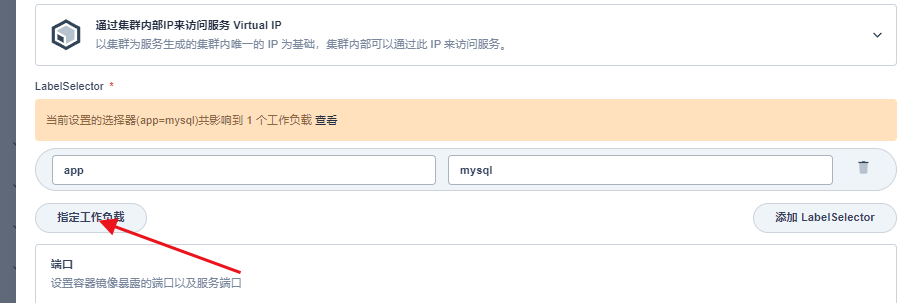

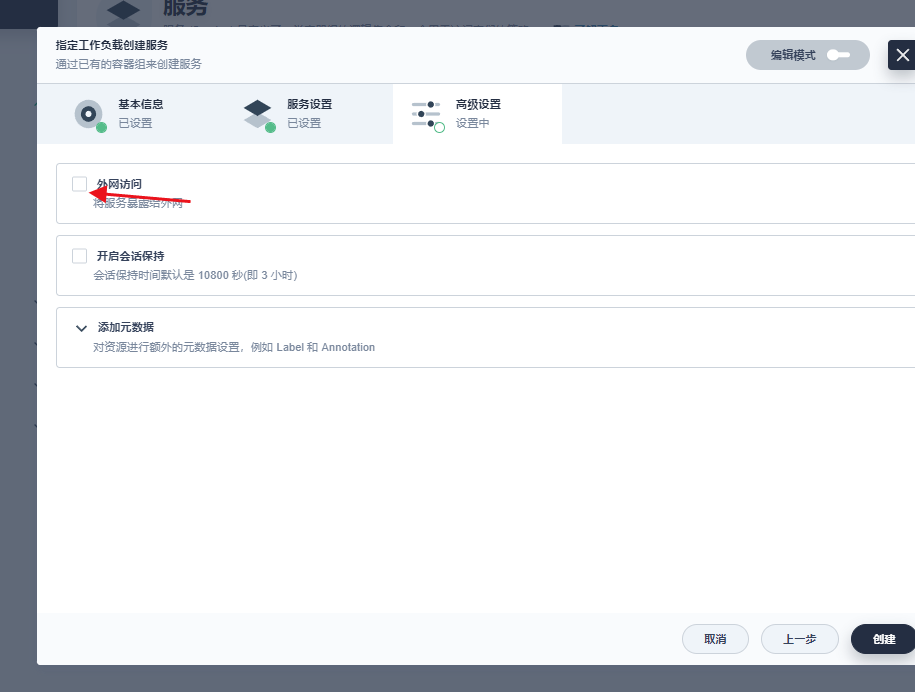

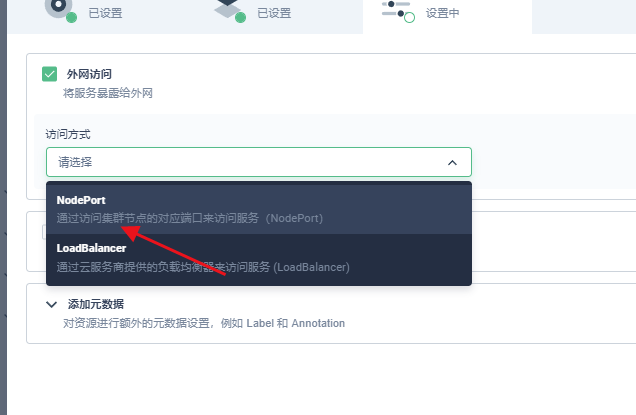

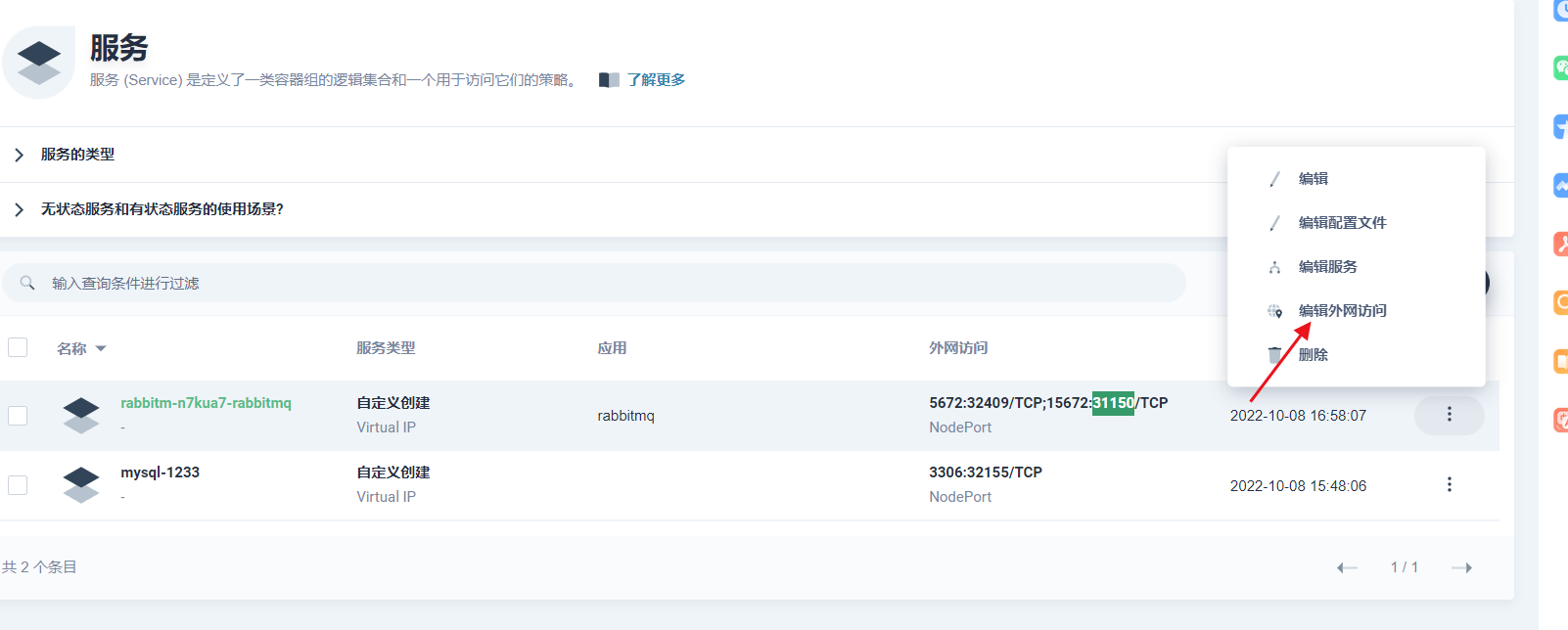

5.3 开放集群访问

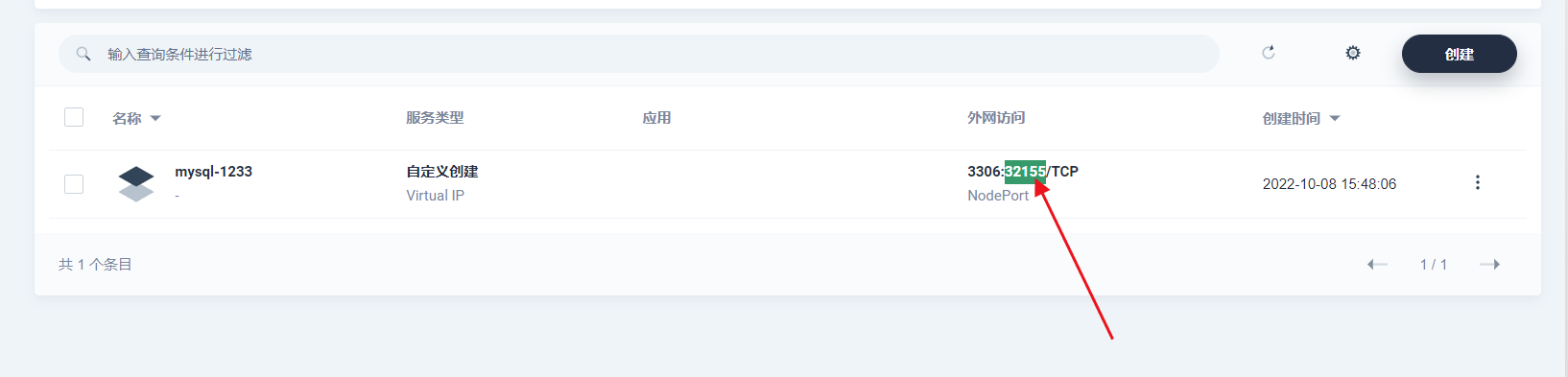

点击创建就创建成功

然后通过此端口就可外网访问

6 通过应用商店部署rabbitmq

6.1 kebesphere开放应用商店

已安装过KS通过管理界面进行设置 若之前已安装过KS,则可通过KS管理界面左侧菜单CRD -> 搜索clusterconfiguration -> 然后编辑其下资源ks-installer,如下图:

同样设置openpitrix.store.enabled为true:

Kubesphere 应用商店详细开启说明可参见: https://kubesphere.io/zh/docs/pluggable-components/app-store/

6.2 应用商店找到rabbitmq

6.3 添加外网访问

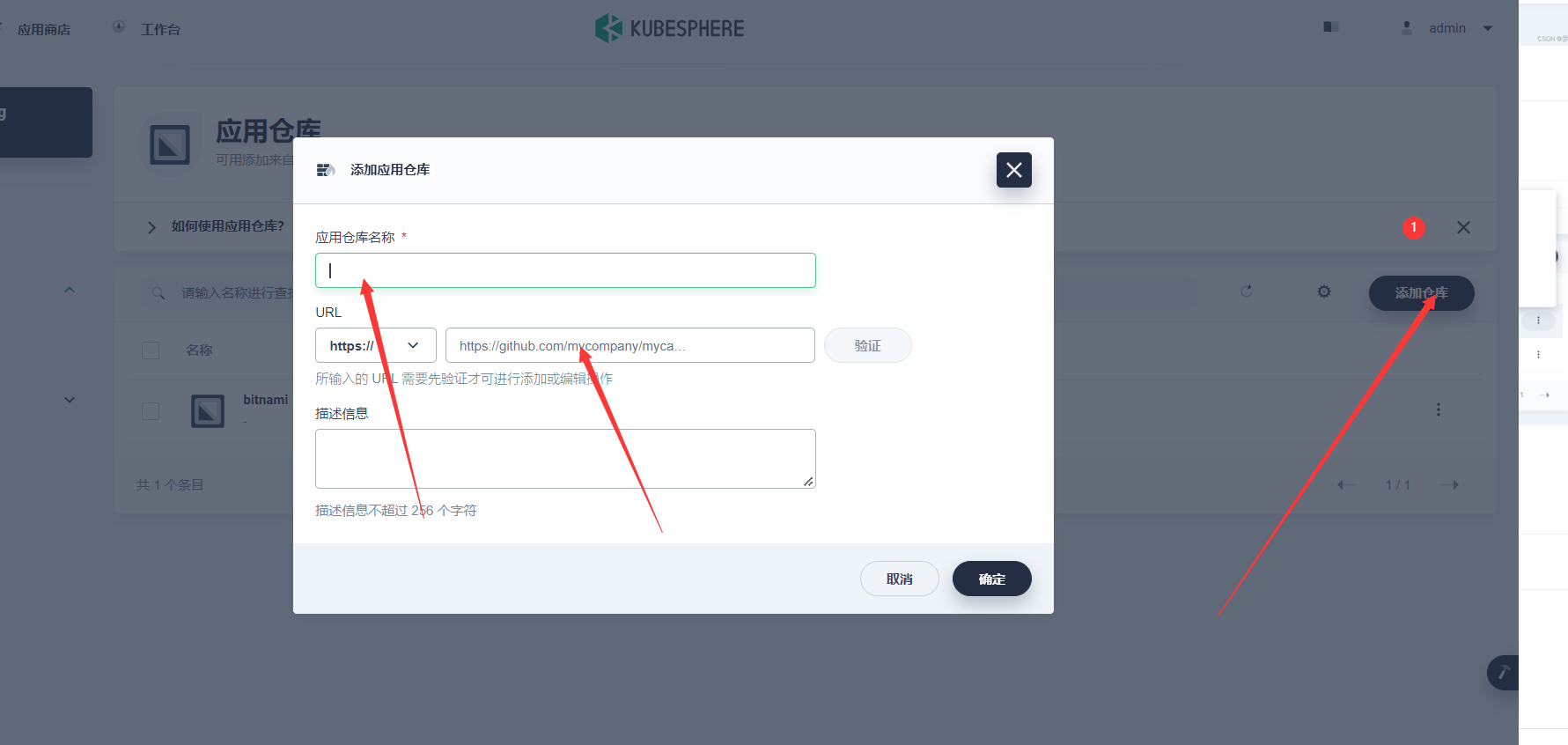

7 从应用仓库添加Zookeeper

7.1 添加应用仓库

7.2